What would success look like if we had to explain it to a new hire?

When someone new joins the team, they often ask deceptively simple questions.

“How do we know if we’re doing well?”

On the surface, that question is about clarity.

At a deeper level, it’s about coherence.

A new team member is a quiet forcing function that you can use to accelerate the team’s coherence. New hires don’t know your history, and they don’t know your internal shorthand. Perhaps most importantly, they don’t know which metrics are sacred and which are ceremonial.

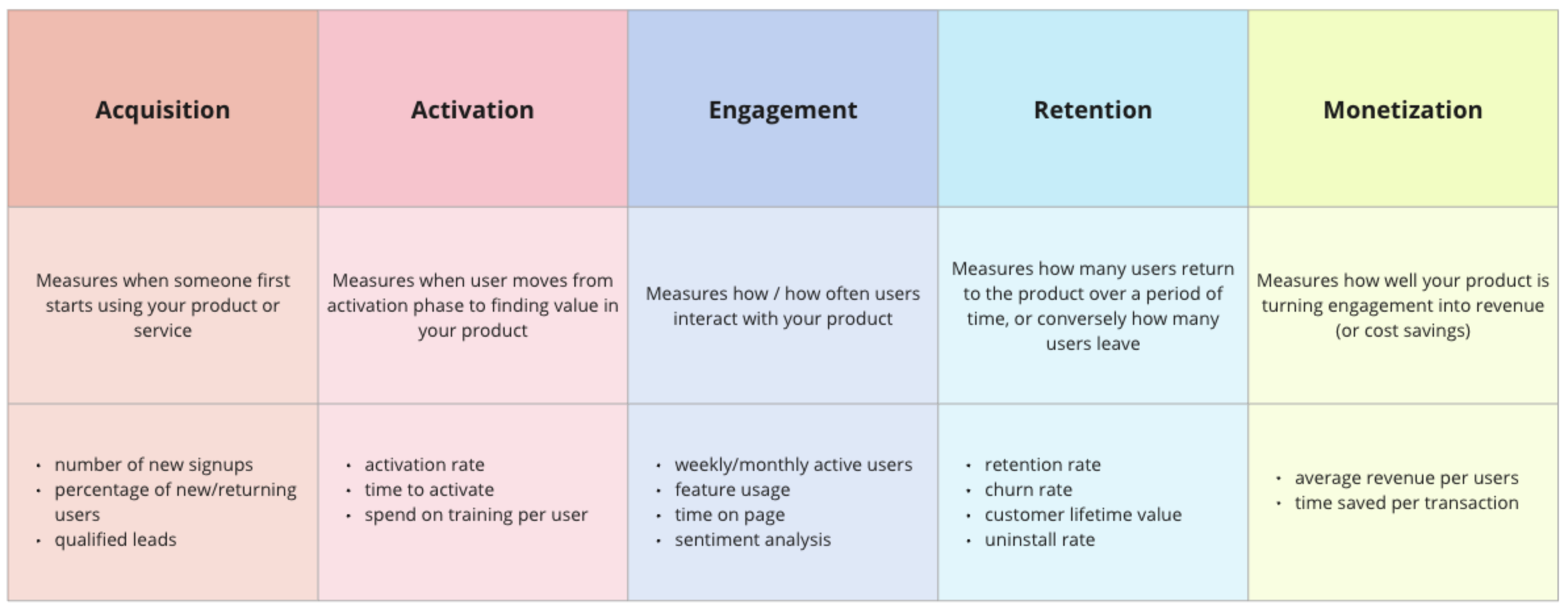

John Cutler often critiques “success theater”, where vanity metrics or dashboards appear to create signal but actually generate noise. A new team member won’t know the difference right away. They’ll take what we present at face value.

Many teams can list metrics. Fewer can describe what winning actually feels like. And if explaining success requires a 40-slide deck, or a dozen KPIs and OKRs layered together, that’s a signal worth noticing. As Richard Rumelt has written, “Good strategy is simple enough to explain, but disciplined enough to execute.” If you can’t explain success simply, there’s a good chance your strategy is either fragmented or overly abstract.

STAND-UP EXERCISE

Use the idea of a new team member as a diagnostic lens.

Take a few moments asynchronously to write down how you would explain the team’s success to a fictional new hire. Think not only about the metrics but also about what they mean. What would you have them pay attention to? What matters most?

Then bring those explanations together and look for themes. Did different practice areas have distinct definitions? Did folks with varied lengths of tenure on the team explain it differently?

This exercise isn’t just about onboarding; it is about surfacing potential misalignment within the team. The places where definitions diverge are often the places where tension quietly lives. Use what you find to agree on a shared definition of success for your team and for your product. If success can’t be explained coherently, it can’t be protected intentionally.